Researcher

Aidan Hinphy

Faculty Advisors

Dr. Zenyu Cui

Abstract

This study explores the use of machine learning algorithms to predict the direction of daily West Texas Intermediate (WTI) crude oil prices, aiming to develop a trading strategy that outperforms traditional methods. It employs various models including Random Forest, K-Nearest Neighbor (KNN), Recurrent Neural Network (RNN), Multilayer Perceptron (MLP), and Long Short-Term Memory (LSTM), using a dataset of daily WTI prices, commodities, and economic indicators. The study finds that deep learning models, especially LSTM, surpass other models in accuracy and trading profitability. It concludes that the LSTM model and proper feature selection are key to effective crude oil price prediction, highlighting the superiority of deep learning over ensemble methods in this context.

Introduction

This study assesses the effectiveness of machine learning algorithms in predicting West Texas Intermediate (WTI) crude oil prices, a challenging task due to the market's complexity influenced by various factors like supply-demand dynamics, geopolitical events, and natural conditions. The research utilizes machine learning models—Random Forest, K-Nearest Neighbor (KNN), Recurrent Neural Network (RNN), Multilayer Perceptron (MLP), and Long Short-Term Memory (LSTM)—to analyze a dataset from 2001 to 2022, including daily prices and macroeconomic variables. The focus is on evaluating the predictive accuracy of these models and the significance of WTI time series data in forecasting returns. This research aims to provide valuable insights for investors and traders in developing effective trading strategies in the crude oil market by identifying the most efficient predictive techniques. The study also contributes to the literature on machine learning applications in financial forecasting.

Methodology

The methodology of the study involves using a dataset of daily WTI crude oil prices and other economic variables from 2001 to 2022 to predict WTI price direction. Five machine learning models—Random Forest, KNN, RNN, MLP, and LSTM—are evaluated. The study employs feature selection methods like SelectKBest, Recursive Feature Elimination, and ExtraTreesClassifier to identify key variables. Hyperparameter tuning is conducted using TimeSeriesSplit and GridsearchCV. The study also makes adjustments to initial modeling strategies to improve accuracy, such as data timeframe modification, training and test set resizing, and class distribution balancing. The performance of each model is assessed based on the accuracy of predictions.

Results

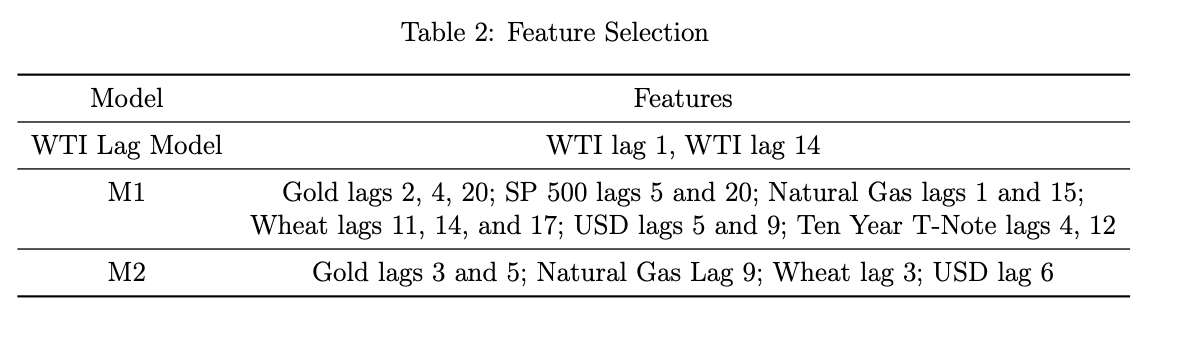

Feature Selection

In the WTI Lag Model, lags 1 and 14 were identified as key predictors of WTI price direction using three feature selection methods. For model M1, significant lags for predicting WTI price direction were found across various exogenous variables: lags 2, 4, and 20 for Gold; 5 and 20 for SP 500; 1 and 15 for Natural Gas; 11, 14, and 17 for Wheat; 5 and 9 for the Dollar Index; and 4, 12, and 16 for the Ten Year T-Note. Model M2's analysis highlighted the combined importance of Gold lags 3 and 5, Natural Gas lag 9, Wheat lag 3, and USD lag 6 in forecasting WTI price direction, focusing on lags 1 through 10 from grouped exogenous variables. Table shows the features selected for the 3 sets of predictions.

Baseline Model

The individual opted for logistic regression as the baseline model, recognizing its simplicity and effectiveness for binary classification tasks. This choice was driven by the model's ease of interpretation and implementation, making it an ideal starting point for exploring more complex algorithms. Without adjusting hyperparameters, the default settings from the scikit-learn library were used, along with setting the class weight to "balanced" to mitigate class imbalance by adjusting weights according to class frequencies. However, the logistic regression model struggled with predicting negative price directions, achieving accuracy scores around 50%, as detailed in Table 6.

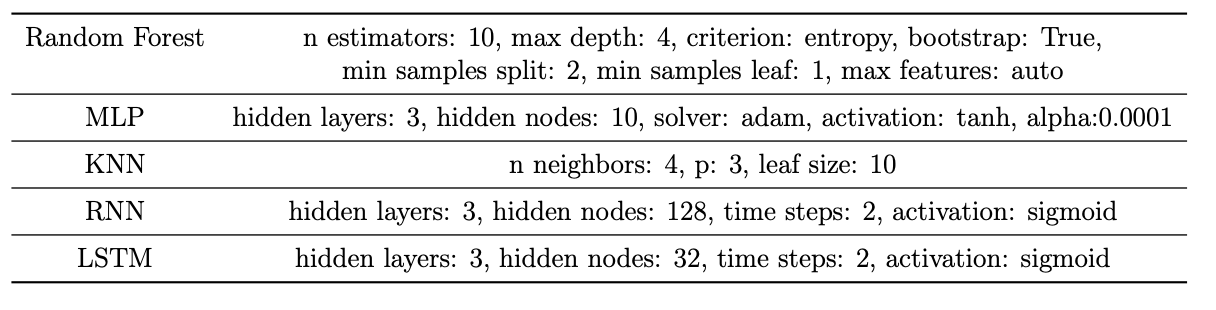

Hyperparameter Tuning

Hyperparameter tuning was crucial for enhancing a machine learning model's accuracy, especially for a time series dataset. The researcher employed TimeSeriesSplit cross-validation with GridSearchCV, ensuring the chronological order of data was preserved for realistic training and testing. TimeSeriesSplit was set to five splits, each with three years of training data and one year for testing, to maintain the sequence integrity. GridSearchCV facilitated an exhaustive search through predefined hyperparameter grids to find the optimal settings, improving the model's dataset specificity. This combination allowed for a precise optimization of the model, evidenced by the optimal parameters listed in Table below for the WTI Lag Model, and provided a robust evaluation over time-separated datasets.

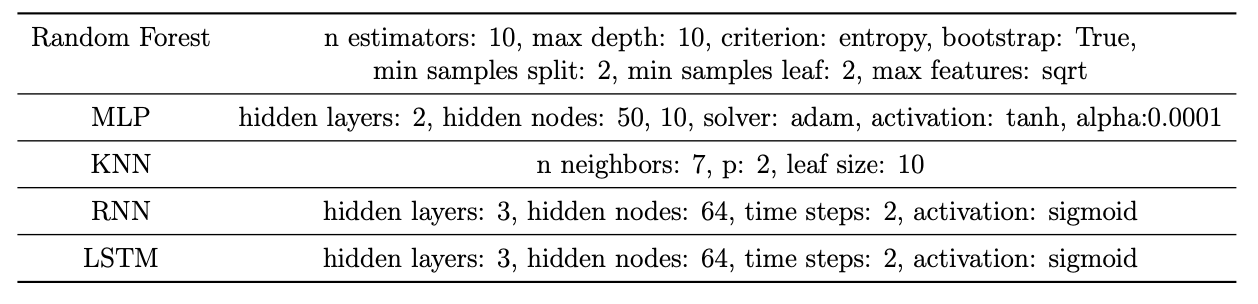

Following table shows the optiomal parameters selected for M1

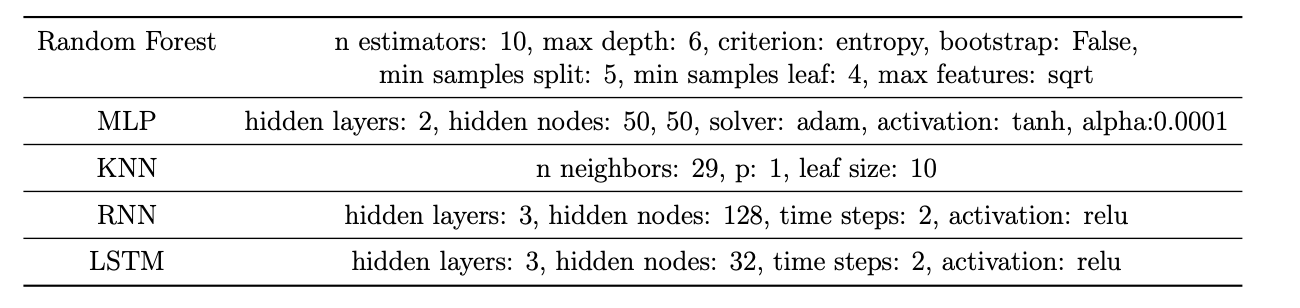

Following table shows optimal parameters selected for M2

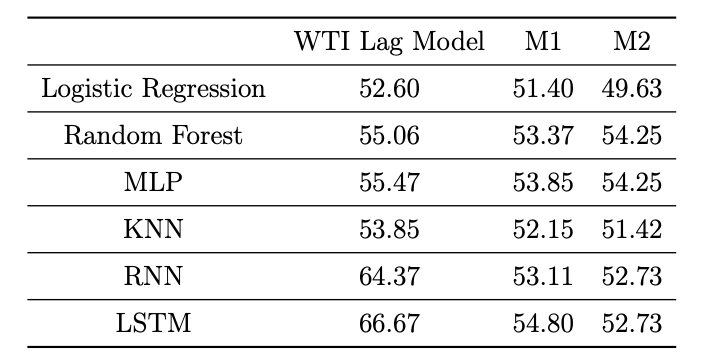

Model Accuracy

Determining the accuracy score is a critical step in assessing a machine learning model's performance, reflecting the proportion of correct predictions it makes. For classification tasks, this involves comparing the model's predicted labels against the actual labels of a test dataset. The discussed models underwent training using data spanning from January 1, 2019, to December 31, 2021, with predictions focused on the year 2022. A higher accuracy score indicates superior model performance, calculated as the ratio of correct predictions to the total predictions made. Following table shows accuracy scores for all of the models

The evaluation revealed that the WTI Lag Model, especially when incorporating RNN and LSTM algorithms, offered superior accuracy in capturing time series patterns. M1 generally outperformed M2, indicating its better grasp of price direction trends, though both models suggested room for improvement and a need for enhanced feature engineering.

LSTM emerged as the standout model, excelling in the WTI Lag Model and M1 by adeptly identifying long-term dependencies for accurate predictions. In contrast, the Random Forest model showed the best results for M2, highlighting the importance of choosing the right model for each dataset's specific characteristics.

Overall, the findings point towards significant potential for increasing accuracy in machine learning models applied to time series data. Future efforts should focus on further refining these models, particularly RNN and LSTM, to improve prediction accuracy and provide deeper insights into data trends.

Investment Strategy

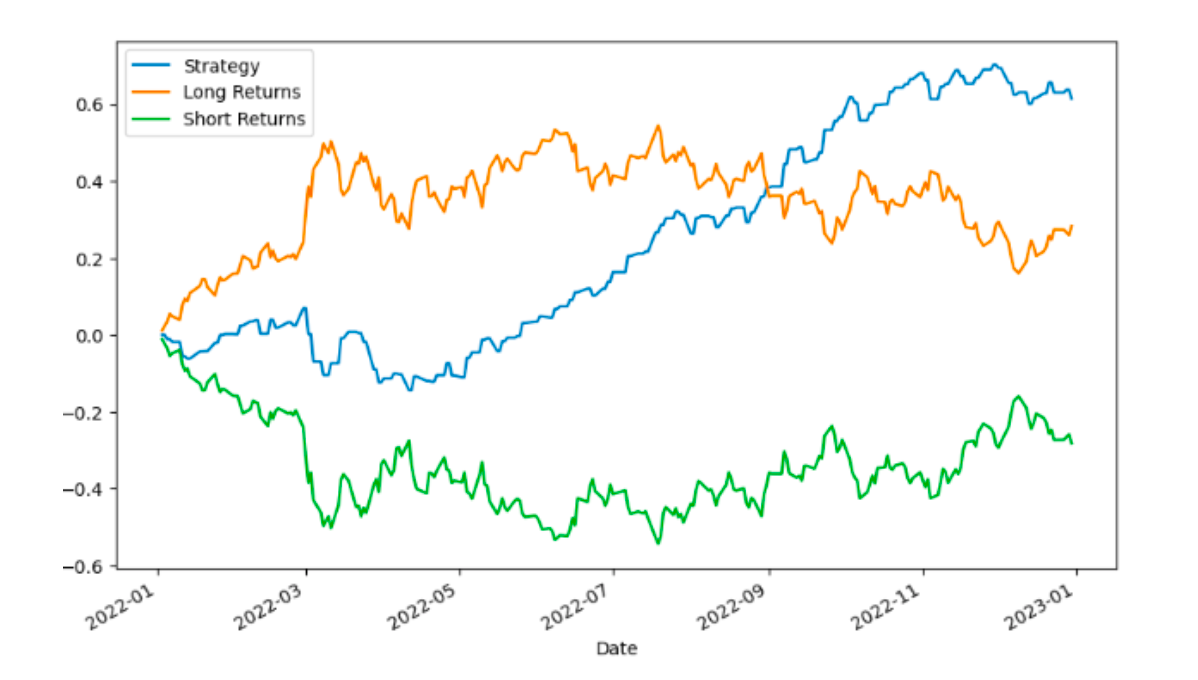

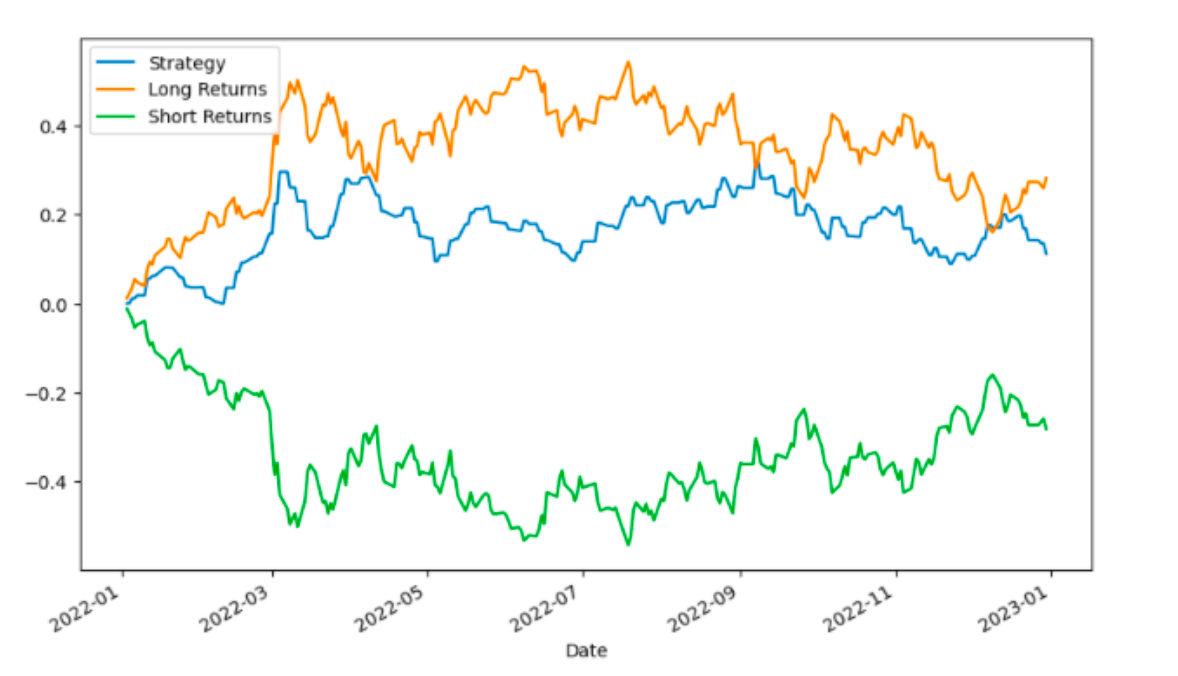

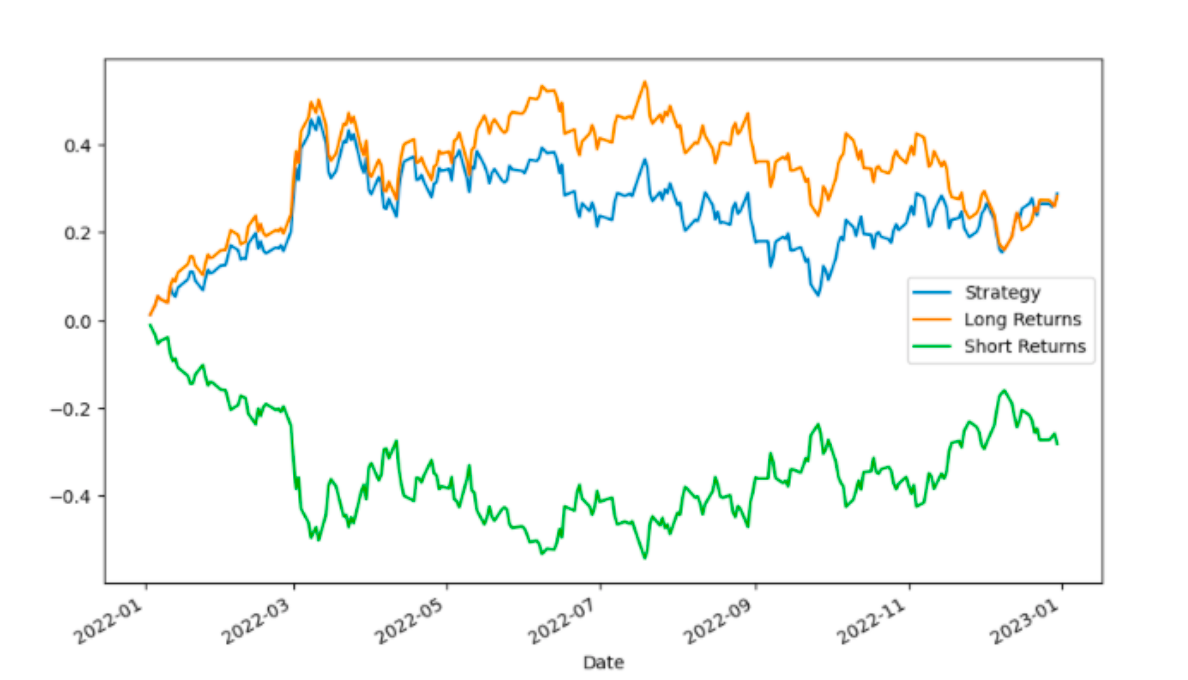

Following an evaluation of various machine learning models, the top performers were selected to guide an investment strategy based on their forecasts. The strategy, informed by predictions from the WTI Lag Model (LSTM), M1 (LSTM), and M2 (Random Forest), involved going long on WTI for positive outlooks and short for negative ones. The performance, excluding transaction costs, is detailed in following figures, illustrating the strategy's efficacy against both long and short WTI benchmarks.

Following figure shows the WTI Lag Model LSTM initially faced difficulties in early 2022 due to rising WTI prices. However, starting May 2022, it significantly outperformed the Long and Short WTI strategies, resulting in a 40 percent and 90 percent upside, respectively.

In contrast, the M1 LSTM strategy, as depicted in figure below, underperformed, mirroring the Long strategy with lower risk and consequently, lesser returns—marking a 10 percent decrease from the Long strategy and a 40 percent gain over the Short strategy.

Meanwhile, the M2 Random Forest strategy closely aligned with the Long strategy without offering additional benefits, as shown in figure below, achieving a 50 percent improvement from the Short strategy but no gain from the Long strategy. Among these, the WTI Lag Model LSTM emerged as the most effective, recommending its exclusive implementation and further refinement to enhance its predictive accuracy and strategic value. This analysis underscores the viability of employing machine learning for investment decision-making, despite not considering trading costs.

Conclusion

The study explored the feasibility of forecasting WTI return directions using various models, finding RNN and LSTM models notably effective in predicting price movements, while others hovered around 50% accuracy. This underscores the potential of deep learning for enhancing forecasting models. The research also showed that an investment strategy based on the WTI LSTM model's predictions could be viable, unlike strategies derived from M1 and M2 models. RNN and LSTM emerged as superior in predicting WTI returns, suggesting deep learning as a robust alternative to traditional models for financial time series. Additionally, WTI time series data proved more valuable than exogenous variables for predictions, indicating a focus on time series and deep learning could enhance forecast accuracy and profitability. Future research could improve prediction accuracy through more extensive feature engineering, hyperparameter tuning, and exploring RNN and LSTM model architectures, suggesting deep learning's significant potential in financial forecasting.